The View | Wealth advisers are doomed in our new artificial intelligence-enabled world

‘Humans are supposed to be the most sophisticated data-processing system, but this is no longer the case as AI ascends’

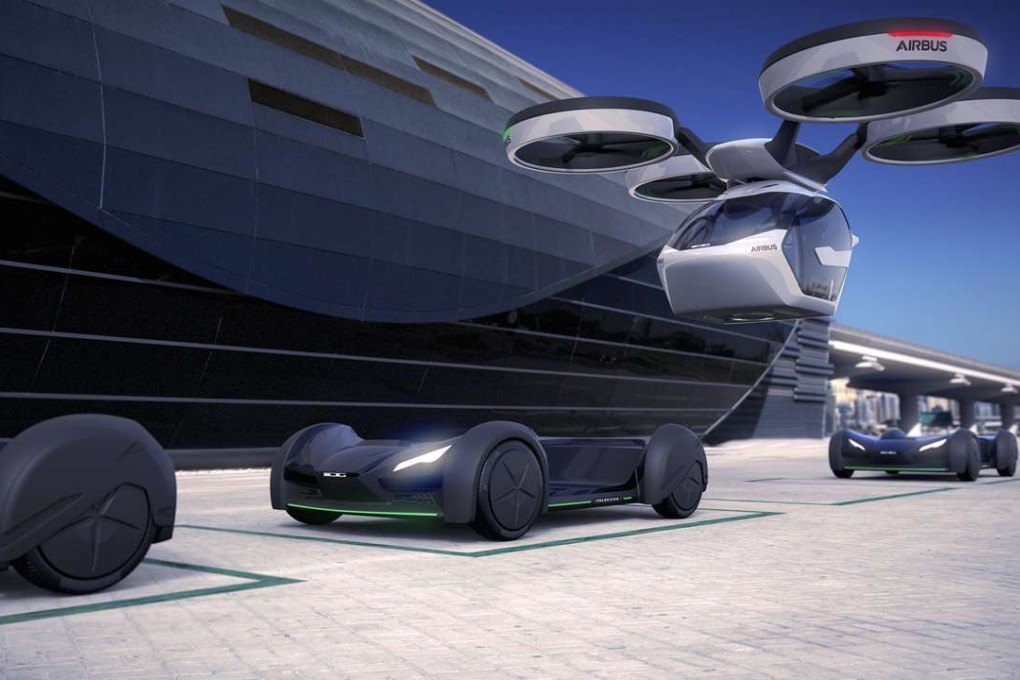

Driverless cars and the technology behind them – artificial intelligence (AI) – have actually arrived and herald a new world.

Past revolutionary visions of technology were rooted in the industrial and post-industrial revolutions. The issues of the day were how to use new technologies such as electricity, radio and computers. Today, we are shifting moral authority to complex algorithms and losing the ability to find our way.

Artificial intelligence, under today’s technological limits, cannot surmount “the trolley problem”, according to a study by Azim Shariff, an assistant professor of psychology at the University of Oregon and director of the Culture and Morality Lab at the University of California, Irvine. There are several scenarios based on the trolley accident problem.

If AI is to play a major role in our lives, it will have to be able to make choices that represent the dangerous, if not lethal, intersection between morality and technology.

My favourite is: a self-driving car carrying a family of four on a mountain road spots a bouncing ball ahead. Then a child runs out into the road to retrieve the ball. The car’s algorithm must quickly decide: should the car risk its passengers’ lives by swerving to the side, where the edge of the road drops off a steep cliff? Or should it stay on course, thereby ensuring its passengers’ safety but hitting the child?

This scenario and many others pose moral and ethical dilemmas that car makers, car buyers and regulators must address before vehicles are given full autonomy, according to a study published recently in Science magazine.

An AI designer cannot watch the events unfold; the designer must have programmed in advance the ability to make a moral choice between an intervention that sacrifices one person for the good of the group and one that protects an individual at the expense of the group.

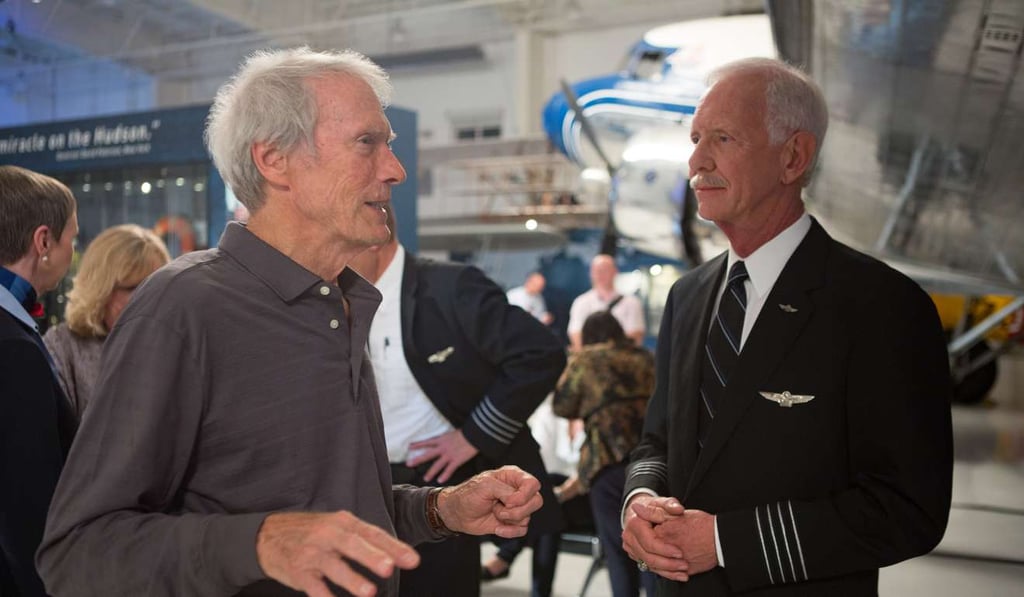

The most challenging AI dilemma can be derived from the 2016 movie Sully, in which the commercial pilot Chesley Sullenberger must make an instinctive snap decision, based on decades of flight experience, to save the passengers by landing his damaged plane on New York’s Hudson River instead of returning to the airport.