Debate over artificial intelligence raises moral, legal and technical questions related to today's 'smart' systems

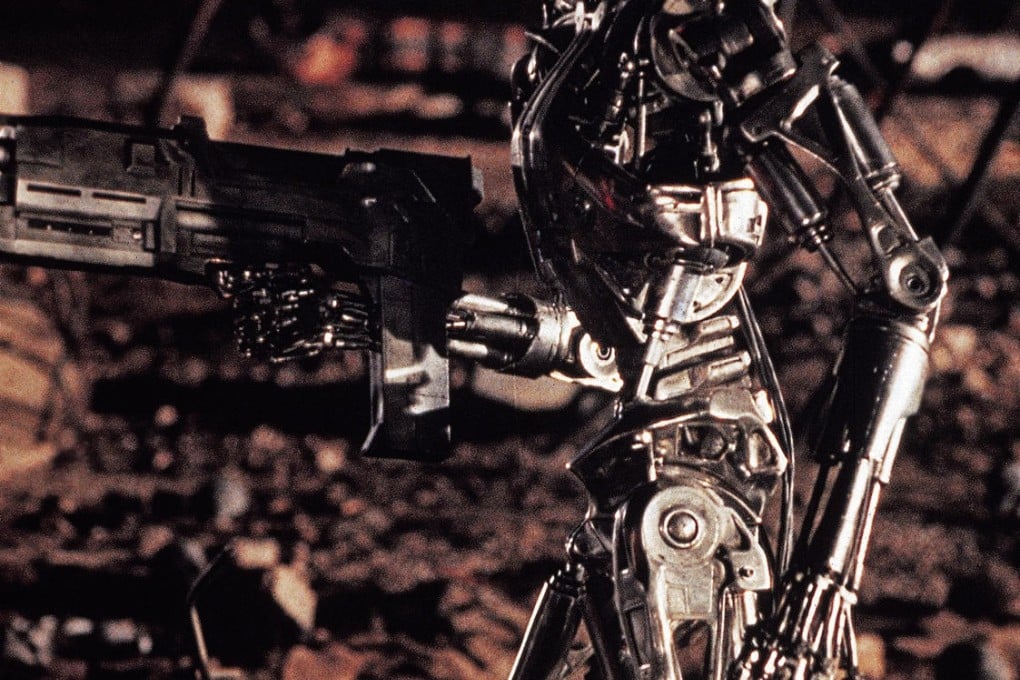

We all know about the Skynet scenario. It was the background story to the popular sci-fi Terminator film series in which a fully autonomous artificial intelligence (AI) system used by the US military ended up launching a global thermonuclear war to eliminate humanity and take over the world.

"It saw all humans as a threat; not just the ones on the other side," a character from the series helpfully explained. "It decided our fate in a microsecond: extermination."

Early this month, a group of prominent scientists, entrepreneurs and investors in the field of artificial intelligence, including physicist Stephen Hawking, billionaire businessman Elon Musk and Frank Wilczek, a Nobel laureate in physics, published an open letter that set out the potential benefits of AI and warned of its potential dangers.

Given the calibre of the people involved, the letter has generated extensive media coverage, and even lively debate among the cognoscenti. Much of the debate naturally focuses on the more sensational warnings contained in the letter and in select passages from the position paper that accompanied it.

Can AI systems become an existential threat to the human race? Responses range over a whole spectrum. Some pundits follow the position of the distinguished American philosopher John Searle, who has questioned whether the strong version of AI - that is, machines that can learn from experience and model behaviour like a human brain, except with incomparably greater computing power and therefore cognitive abilities - is possible at all.

Others, like science writer Andrew McAfee, argue that strong AI is possible but that we are still too far from it to need to worry about it seriously.