Companies are hacking facial recognition to make it safer

- Facial recognition testing companies like Qingfei are producing sophisticated 3D-printed faces and even robots to reveal the technology’s vulnerabilities

Facial recognition is seemingly used for everything these days: bank accounts, passwords, flights, office entry and the ubiquitous public cameras hooked up to AI systems. But what happens when these systems fail us?

Last December, a company called Kneron went around the world trying to break into facial recognition systems used to authenticate people at airports, railway stations and even on payment apps. As it turned out, many of them could be fooled, including self check-in terminals at the Netherlands’ largest airport, where the team tricked a sensor just by using a photo displayed on a smartphone.

Companies like Kneron aren’t here to steal your identity, though. Their break-ins are part of a process that keeps your face safe from hackers. The technique is called a presentation attack, and testing systems against it helps keep strangers from checking in to your flights.

“Basically, the more elaborate the attack and the more realistic [the image], the more dangerous the attack is,” said Sébastien Marcel, head of biometrics security and privacy at Switzerland’s Idiap Research Institute.

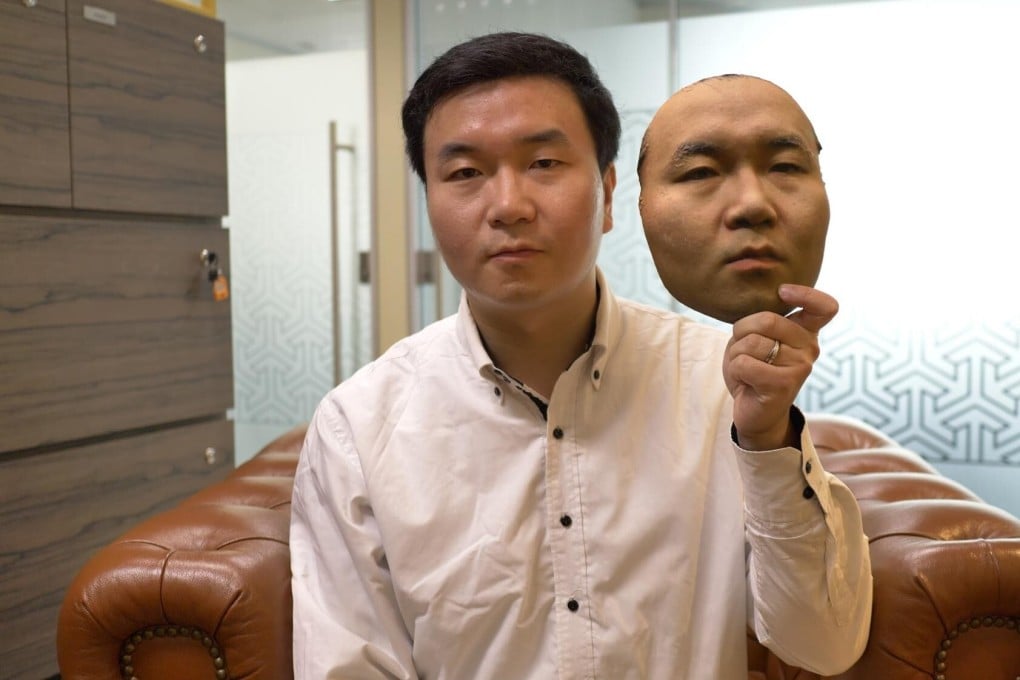

This testing has become ever more important as facial recognition shows up in everything from police surveillance to toilet paper dispensers. It allows testers to determine whether a system can distinguish between you and an imposter. To carry it out, experts “attack” the software with a fake image that could be as simple as a piece of paper with a face printed on it or as elaborate as a 3D-printed model made of plastic or silicone.

If the facial recognition algorithms are doing what they’re supposed to and reject the simpler fakes, the facial models get even more elaborate. Beijing-based Qingfei Technologies, for instance, can recreate your face in such detail that it’s enough to fool Apple’s Face ID.