AI is challenging us to rethink the distinction between ‘real’ and ‘fake’

- By allowing that authenticity and artistic merit can be achieved through diverse means, including the integration of synthetic elements or AI help, we can reimagine the boundaries of creativity and originality

- But this paradigm shift comes with risks around telling truth from deception

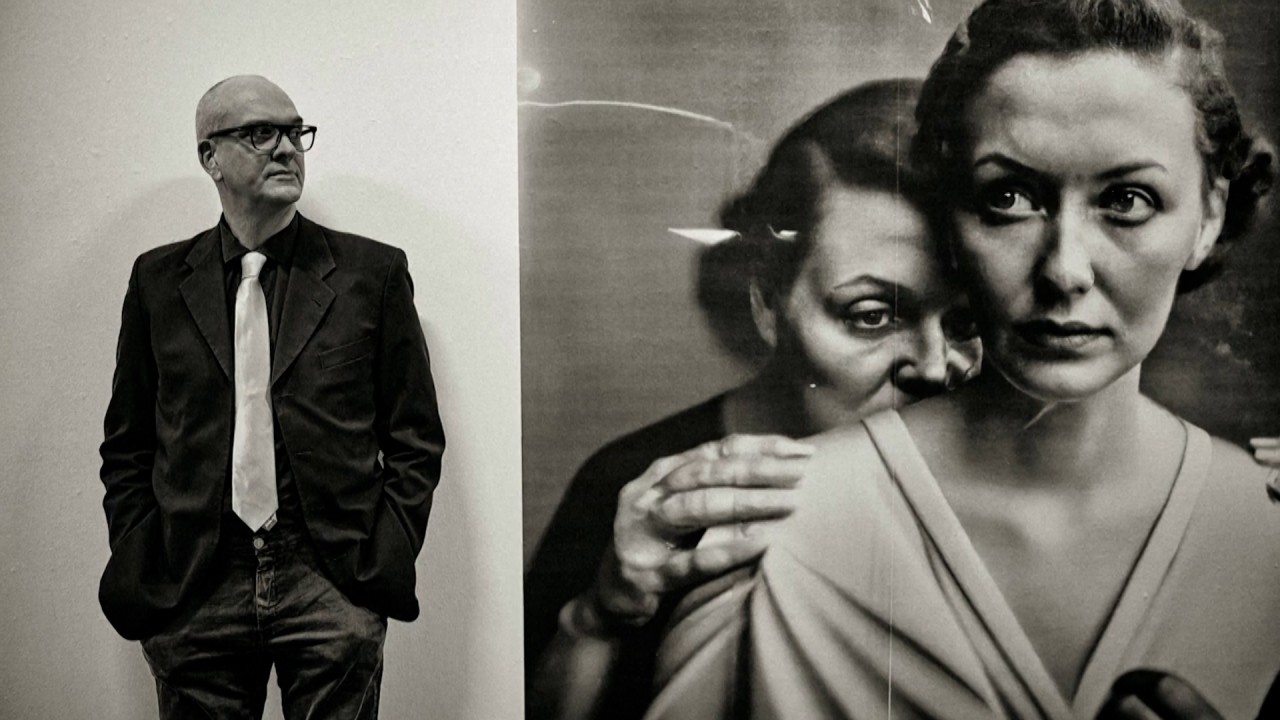

Earlier this year, The Hague’s Mauritshuis museum replaced Vermeer’s iconic Girl with a Pearl Earring with an AI-generated artwork inspired by the original. Created by Berlin-based artist Julian van Dieken using Midjourney, it fuelled a heated debate around artist agency and copyright issues.

Text- and image-generating AI models have one thing in common: they are constructed, or “trained”, by analysing large collections of digital images, videos, text and audio.

Creative industries affected by the transformative power of generative AI seek a balance between innovation, protection of intellectual property and the preservation of artistic integrity.

The music label said using its artists’ music to train generative AI “represents both a breach of our agreements and a violation of copyright law”. As new AI-generated hits continued to pop up, major artists joined the conversation and industry insiders explored questions such as whether music recorded by humans could become the exception rather than the rule.

But global consensus remains elusive. In Japan, for instance, the training of AI models using publicly available data is considered legally permissible, said Keiko Nagaoka, Minister for Education, Culture, Sports, Science and Technology.

AI’s advancing capabilities, which promise lower costs, faster delivery and a superior product, have brought synthetic media into the spotlight. While creating a significant market opportunity, this development also raises concerns about the erosion of authenticity.

To examine this, we must acknowledge the orthogonal nature of real vs fake and organic vs synthetic, and re-evaluate our conventional understanding of “original work”. The notion must encompass the possibilities offered by innovative tools.

By allowing that authenticity and artistic merit can be achieved through diverse means, including the integration of synthetic elements or AI help, we can reimagine the boundaries of creativity and originality.

But this paradigm shift comes with risks around telling truth from deception. The widespread adoption of synthetic media calls for heightened vigilance and critical thinking. Most recently, the European Union urged social media platforms to “immediately” start labelling AI-generated content as part of moves to combat fake news and disinformation.

The “fake” Drake track is a reference point for observing how our hunger for bespoke content plays out in the evolving landscape of digital creation. Given the vast depths of data lakes of our individual preferences, habits and interests, the concept of hyper-personalisation beckons.

Video game developers are designing increasingly immersive experiences. AI tools allow marketers not only to analyse a wealth of customer data but also to create next-generation customer touchpoints with personalised content.

This next-level customisation goes beyond deeply resonating with a target audience, and appeals on a completely personal level. By leveraging AI algorithms, people can enjoy content that aligns with their distinct interests in music, film, literature or other media forms.

The content becomes a reflection of their individuality, resulting in a more engaging experience – even as content production costs decline.

But the convergence of AI-driven personalisation and our digital immersion risks narrowing our exposure to diverse perspectives and shared cultural experiences.

Such curated versions of reality could hinder serendipitous discovery and exposure to alternative viewpoints, isolating people in a world where the distinction between fake and real becomes highly subjective and the responsibility of discernment rests solely on personal judgment.

Allen Day is a Google executive and spokesperson, developer advocate and creator of Google Cloud’s cryptocurrency public data sets
