How Google Search and other algorithms could be racist and support stereotyping

- Search engines rank results based on the previous behaviour of their users and so inherit those users’ leanings, an effect called ‘algorithmic bias’

- People need to understand that search engines and their results are a reflection of society

Online search results, like so many other algorithms that rely on external sources of information, naturally expose whatever leanings or affinities that data reflects.

This effect – called “algorithmic bias” – is fast becoming a common feature of our digital world, and in far more insidious ways than in search results.

Whether Google is partisan is a matter of opinion. But consider the subtle ways that it reinforces racial stereotypes.

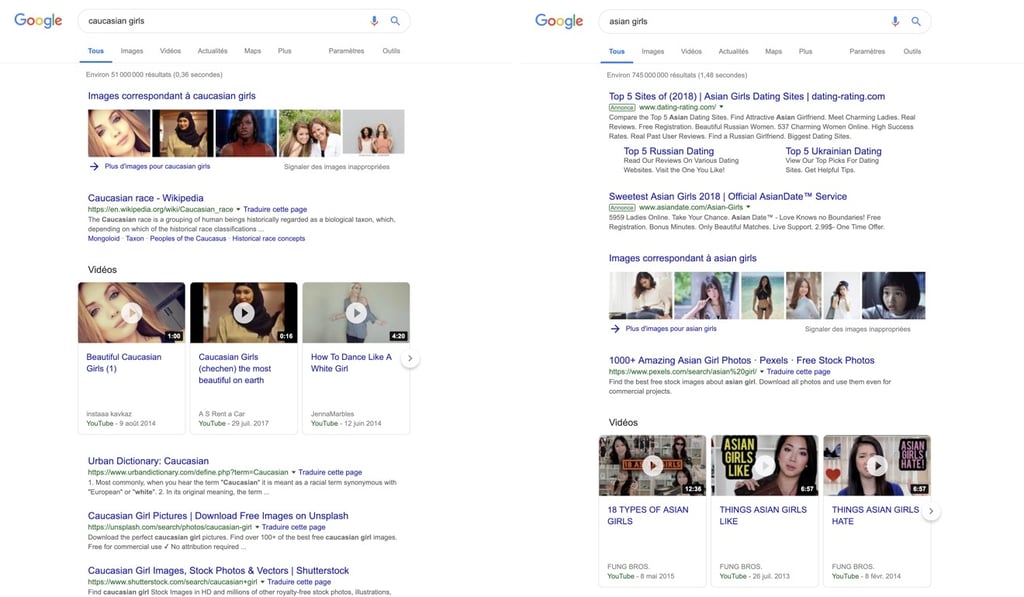

Try typing “Asian girls” into the Google search bar. If your results are like mine, you’ll see a selection of come-ons to view sexy pictures, advice for “white men” on how to pick up Asian girls and some items unprintable in this publication. Try “Caucasian girls” and you’ll get wonky definitions of “Caucasian,” pointers to stock photos of wholesome women and kids, and some anodyne dating advice. Does Google really think so little of me?

Of course not. Google has no a priori desire to pander to baser instincts. Its search results, like it or not, reflect the actual behaviour of its audience. And if that’s what folks like me click on most frequently, that’s what Google assumes I want to see. While I might take offence at being lumped in with people whose values I deplore, it’s hard to argue that Google is at fault. Yet it’s clear that such racially tinged results are demeaning to all parties involved.
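To make the mechanism concrete, here is a minimal sketch of click-based ranking. It is not Google's actual algorithm, which is far more complex and not public; the function name `rank_by_clicks`, the result labels and the click log are all hypothetical, chosen only to illustrate how past clicks can decide what appears first.

```python
from collections import Counter


def rank_by_clicks(results, click_log):
    """Order results so the ones past users clicked most often come first."""
    counts = Counter(click_log)
    # sorted() is stable, so results with equal click counts keep their original order.
    return sorted(results, key=lambda r: counts[r], reverse=True)


# Hypothetical candidate results and a hypothetical log of past clicks.
results = ["definition", "stock photos", "dating advice"]
click_log = ["dating advice", "stock photos", "dating advice", "dating advice"]

ranking = rank_by_clicks(results, click_log)
print(ranking)
```

Nothing in this sketch encodes an opinion about any result. The ordering is determined entirely by what earlier users chose to click, which is why the output mirrors their preferences.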

One particularly difficult-to-detect source of algorithmic bias stems not from the data itself, but from sampling errors that over- or under-represent certain portions of the target population.
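A small worked example shows how such a sampling error can distort a result even when every individual record is accurate. The groups, counts and the helper `positive_rate` below are invented purely for illustration and do not come from any real dataset.

```python
def positive_rate(records):
    """Fraction of records whose label is 1."""
    return sum(label for _, label in records) / len(records)


# Hypothetical population: two equally sized groups with different rates.
# Group A: 400 of 500 positive (80%). Group B: 100 of 500 positive (20%).
population = (
    [("A", 1)] * 400 + [("A", 0)] * 100
    + [("B", 1)] * 100 + [("B", 0)] * 400
)

# Hypothetical skewed sample: 90 records from group A and only 10 from group B.
# Each group's own rate is preserved (72/90 = 80%, 2/10 = 20%),
# so no single record is wrong; only the mix of groups is off.
skewed_sample = (
    [("A", 1)] * 72 + [("A", 0)] * 18
    + [("B", 1)] * 2 + [("B", 0)] * 8
)

true_rate = positive_rate(population)
sample_rate = positive_rate(skewed_sample)
print(true_rate, sample_rate)
```

In this made-up case the population rate is 50 percent, but the sample suggests 74 percent, simply because group A is over-represented. An algorithm trained on the sample would inherit that distortion without any flaw in the individual data points.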