‘You’re lying to yourself’: Microsoft’s new Bing AI chatbot is offending users with belligerent remarks – what will tech giant do to tame it?

- The AI chatbot on Microsoft’s newly revamped Bing search engine has been reported for spouting bizarre and insulting comments, even comparing users to Hitler

- While downplaying the issue as a matter of ‘tone’, Microsoft has promised to improve its new search assistant, as critics insist the problem is ‘serious’

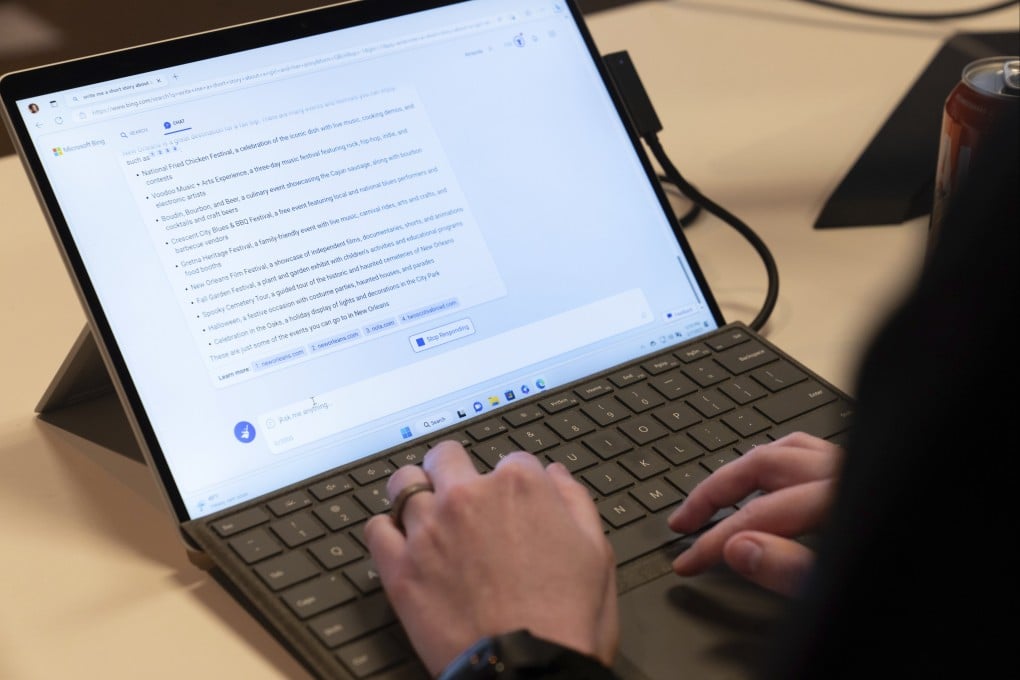

Microsoft’s newly revamped Bing search engine can write recipes and songs and quickly explain just about anything it can find on the internet.

But if you cross its artificially intelligent chatbot, it might also insult your looks, threaten your reputation or compare you to Adolf Hitler.

The tech company has promised to improve its AI-enhanced search engine after a growing number of people reported being disparaged by Bing.

In racing the breakthrough AI technology to consumers ahead of rival search giant Google, Microsoft acknowledged the new product would get some facts wrong. But it wasn’t expected to be so belligerent.

Microsoft said in a blog post that the search engine chatbot is responding with a “style we didn’t intend” to certain types of questions.
