Facial recognition software biased against Asians and black people, major US government study finds

- Tests on 189 algorithms from 99 manufacturers, which together represent most of the industry, found a higher rate of incorrect matches for minorities than for white people

- Use of facial recognition is set to widen at airports worldwide, and travellers may decide it’s worth the trade-off in accuracy if they can save a few minutes

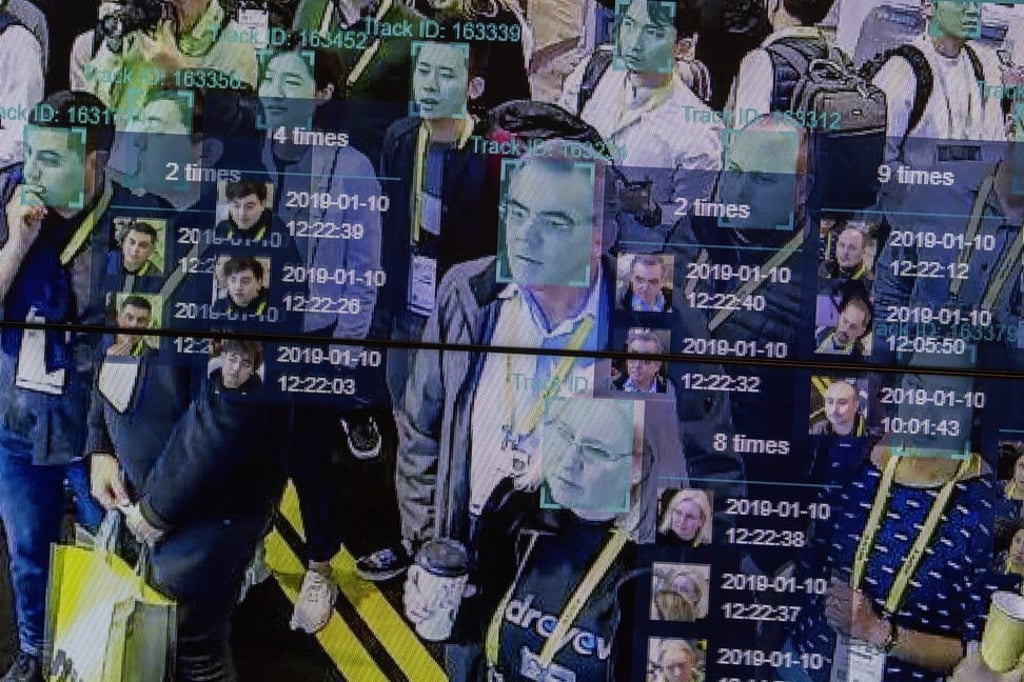

Facial recognition software has a higher rate of incorrect matches between two photos for Asian and black people relative to white people, a United States government study has found.

The evidence of bias against minorities in the software comes as its use is set to expand at airport security checkpoints in Asia, Europe and the United States.

Researchers at the National Institute of Standards and Technology studied the performance of 189 algorithms from 99 manufacturers representing most of the industry. They concluded that some algorithms performed better than others, which suggests the industry can likely correct the problems.

The institute found that, among US-developed algorithms, the rate of incorrect matches, or false positives, was highest for American Indians.