Singapore seeks ‘online safety’ rules to remove or block harmful social media content

- Examples of content that could be blocked reportedly include viral social media challenges that encourage young people to perform dangerous stunts

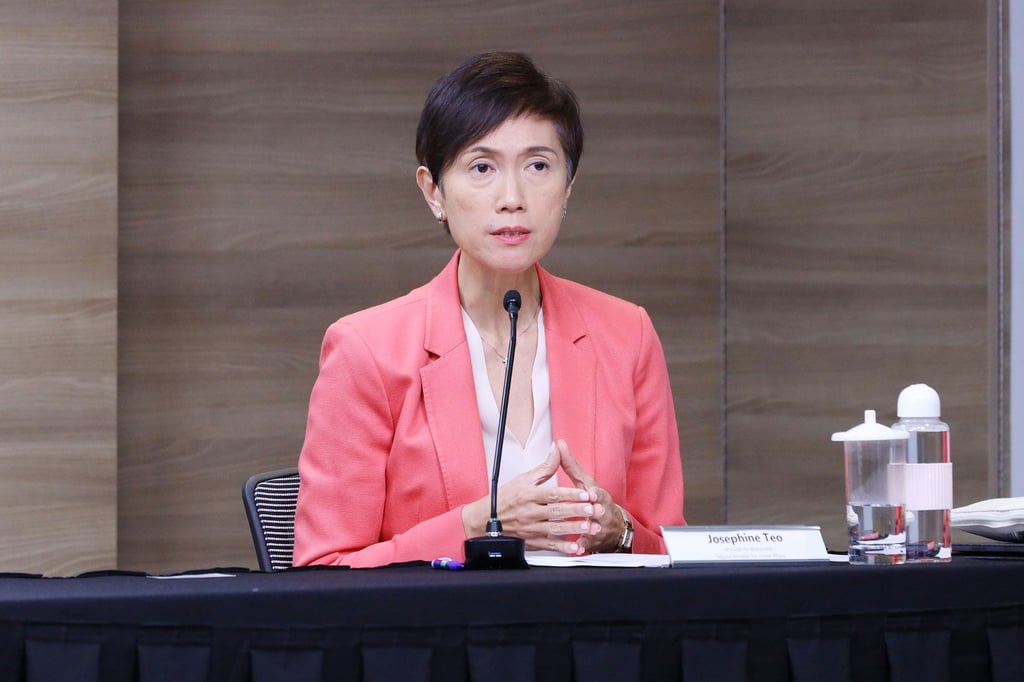

- The rules would push platforms to take greater responsibility for user safety and shield users from harmful content, said communications minister Josephine Teo

The envisioned rules would also require social media platforms to implement community standards and content moderation processes to shield users from harmful content, communications minister Josephine Teo said in a Facebook post on Monday. The guidelines, which will undergo public consultation starting next month, would push social media companies to take greater responsibility for user safety, she said.

Singapore has long defended the need for laws to police content on the internet, saying the island nation is especially vulnerable to fake news and misinformation campaigns given that it’s a financial hub with a multi-ethnic population that enjoys widespread internet access.

The Southeast Asian nation joins countries such as Australia, Germany and Britain, which have enacted or proposed online content and safety laws.