Ex-Google worker calls for strict regulations to stop ‘killer robots’ from starting wars or causing mass atrocities

- Laura Nolan, who resigned from Google in protest at being sent to work on military drones, briefed UN diplomats on the dangers posed by autonomous weapons

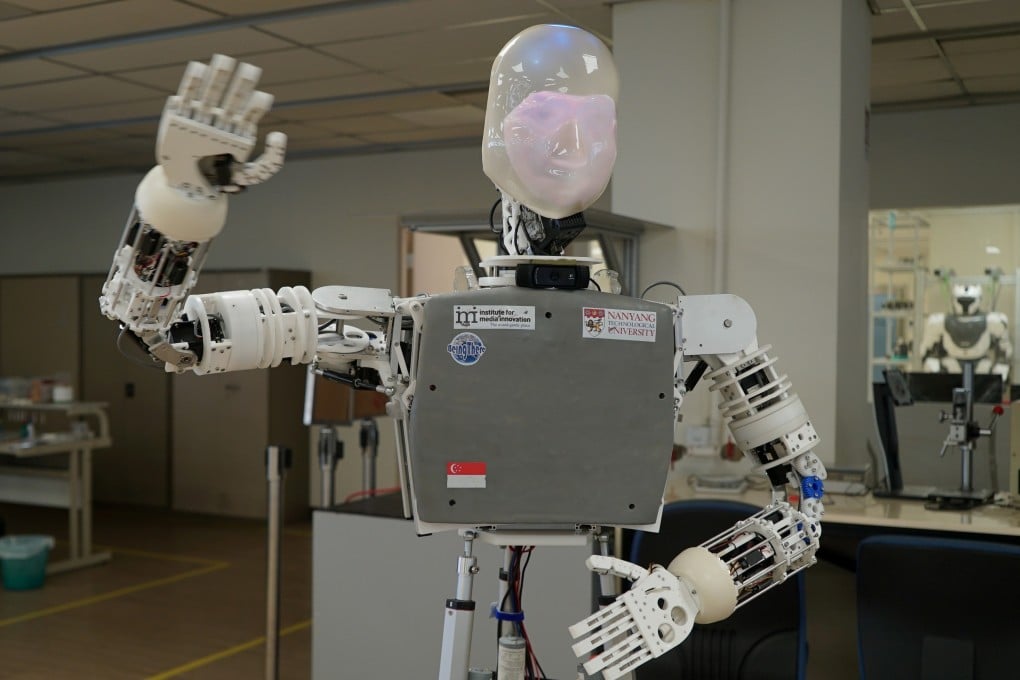

A new generation of autonomous weapons or “killer robots” could accidentally start a war or cause mass atrocities, a former top Google software engineer has warned.

Laura Nolan, who resigned from Google last year in protest at being sent to work on a project to dramatically enhance US military drone technology, has called for all AI killing machines not operated by humans to be banned.

Nolan said killer robots not guided by human remote control should be outlawed by the same type of international treaty that bans chemical weapons.

Nolan said that, unlike drones, which are controlled by military teams often thousands of miles away from where the flying weapon is being deployed, killer robots have the potential to do “calamitous things that they were not originally programmed for”.

Nolan, who has joined the Campaign to Stop Killer Robots and has briefed UN diplomats in New York and Geneva on the dangers posed by autonomous weapons, said: “The likelihood of a disaster is in proportion to how many of these machines will be in a particular area at once. What you are looking at are possible atrocities and unlawful killings even under laws of warfare, especially if hundreds or thousands of these machines are deployed.

“There could be large-scale accidents because these things will start to behave in unexpected ways. Which is why any advanced weapons systems should be subject to meaningful human control, otherwise they have to be banned because they are far too unpredictable and dangerous.”