Vein-pattern recognition is the latest technology driving China’s AI, robotics revolution

- A new world order is coming, driven by Chinese companies such as DeepBlue Technology and Yitu Technology

- Artificial intelligence, vein-pattern recognition and computer vision are already being adopted across China

Some places allow you to travel back in time; historical sites and ancient buildings can transport visitors to eras past. Four floors of a brightly lit building on Shanghai’s Weining Road do the opposite, allowing the visitor a glimpse of the future. But only if they can get inside.

DeepBlue Technology, which recently placed first in the Pacific-Asia Conference on Knowledge Discovery and Data Mining machine-learning challenge and is one of China’s most innovative robotics and artificial intelligence (AI) companies, keeps its secrets well hidden, behind closed doors. And the only way to open them is with the palm of a hand. The correct hand.

An infrared light penetrates the skin and maps what lies beneath, reading the diagram drawn by a person’s veins.

Chen Haibo, DeepBlue’s founder and chief executive, says vein-pattern-recognition technology is the safest and most accurate integrated biometric system developed to date. It is also key to many other products designed in the company’s lab.

“Our veins are unique and don’t change over time,” Chen says. “So even if you registered when you were a baby, the system will recognise you as an adult, too. External features, like fingerprints or faces, can be copied and altered and raise privacy concerns.

“On Taobao [the online shopping platform owned by Alibaba, which also owns the South China Morning Post] you can buy fingerprint and facial replication systems for about 10 yuan [US$1.50] for the fingerprint and 60 yuan or so for the face. But internal features can’t be reproduced, and even if you chop my hand off [and thus stop the blood supply], the system won’t let you in with it.”

That’s why the employees of DeepBlue – surely a name chosen to engender a warm, fuzzy feeling of trust – don’t carry access cards. One of their hands is all they need to get to their work stations.

Palm scanners are also used on vending machines developed and built by DeepBlue. To demonstrate how the system works, Chen places the palm of his hand on the reader to open what looks like a simple fridge. He takes out a bottle and closes the door while a computer that has been filming him calculates what he owes and deducts it from his Alipay e-wallet.

“These autonomous vending machines, which we call TakeGo, use image recognition to detect what the customer takes. It also works with full-sized stores, where there is no need for employees nor for customers to carry cash, cards or even mobile phones to go shopping,” says Chen.

“One of the main advantages over the more traditional vending machines and unstaffed stores is that you can get a refund for whatever you don’t want to take by just placing it back.”
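The take-and-put-back billing Chen describes can be sketched in a few lines. This is only an illustration of the idea, not DeepBlue's implementation: the event names (`"take"`, `"return"`), the `amount_owed` function and the price list are all hypothetical, and the sketch assumes the image-recognition step has already turned the camera footage into a list of events.

```python
from collections import Counter

# Hypothetical price list in yuan; the article gives no real prices.
PRICES = {"water": 2.0, "cola": 3.5}


def amount_owed(events, prices=PRICES):
    """Return the charge for one session of (action, item) events.

    Each event is assumed to come from the vision system as either
    ("take", item) or ("return", item).
    """
    basket = Counter()
    for action, item in events:
        if action == "take":
            basket[item] += 1
        elif action == "return":
            # A customer can only put back something they took.
            if basket[item] == 0:
                raise ValueError(f"cannot return {item!r}: not in basket")
            basket[item] -= 1
        else:
            raise ValueError(f"unknown action {action!r}")
    # Only what is still out of the fridge when the door closes is billed.
    return sum(prices[item] * count for item, count in basket.items())


session = [("take", "water"), ("take", "cola"), ("return", "cola")]
print(amount_owed(session))
```

In this sketch the "refund" is never a separate transaction: an item placed back simply drops out of the basket before the e-wallet is charged.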

Vein scanning and computer vision are two of the three technologies at the heart of DeepBlue’s plans for the future. The third is AI. And all three combine in the company’s premier product: the Panda Bus.

An electric autonomous vehicle, the bus is 12 metres long and, with batteries, weighs 18 tonnes. It was launched in January and could drive any route on its own.

It doesn’t yet do so because regulations in China require a human to watch over the bus’ every move, as they do in the seven cities in which it is in operation: Changzhou and Xuzhou (Jiangsu province), Chizhou (Anhui), Deyang (Sichuan), Jinan (Shandong), Ganjiang (Jiangxi) and Tianjin. Users don’t require a ticket to get on: if they have registered with the operating body, they can scan a palm as they board.

Chen, however, believes there is an even more interesting facet to this vehicle, which does look disconcertingly like a panda: “It can turn public transport, which is usually a loss-making enterprise, into a profitable business.”

How? “By combining TakeGo retail machines installed in each bus and smart advertising,” he says. “Our bus is equipped with eight screens and eyeball-tracking cameras. These cameras can interpret people’s reactions while watching ads. This allows operators to charge companies according to the time people are actually looking at their ads, give them feedback, and therefore increase revenue.”

Chen calculates that each Panda Bus could generate up to 20,000 yuan (US$2,970) per month from TakeGo vending machine sales and up to 100,000 yuan (US$14,830) per month through the smart advertising system.
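Taken together, Chen's two upper estimates put the ceiling at 120,000 yuan per bus per month, with advertising accounting for roughly five-sixths of it. The short sketch below just does that arithmetic; the function names and the fleet size in the example are illustrative assumptions, and the figures are Chen's stated maximums rather than reported earnings.

```python
# Upper estimates quoted by Chen, in yuan per bus per month.
TAKEGO_MAX_YUAN = 20_000
ADS_MAX_YUAN = 100_000


def monthly_ceiling_yuan(buses=1):
    """Best-case monthly revenue for a fleet, using Chen's maximums."""
    return buses * (TAKEGO_MAX_YUAN + ADS_MAX_YUAN)


def ad_share():
    """Fraction of the best-case revenue that comes from advertising."""
    return ADS_MAX_YUAN / (TAKEGO_MAX_YUAN + ADS_MAX_YUAN)


print(monthly_ceiling_yuan())    # one bus
print(monthly_ceiling_yuan(10))  # a hypothetical fleet of ten
print(round(ad_share(), 2))
```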

“Technology and research by themselves are not very useful. The only viable way forward is to turn [buses] into profitable products,” he says. “Most Chinese AI companies come from very theoretical backgrounds, but we believe in practical applications. We do research, develop products and manufacture them all in our Changzhou factory.”

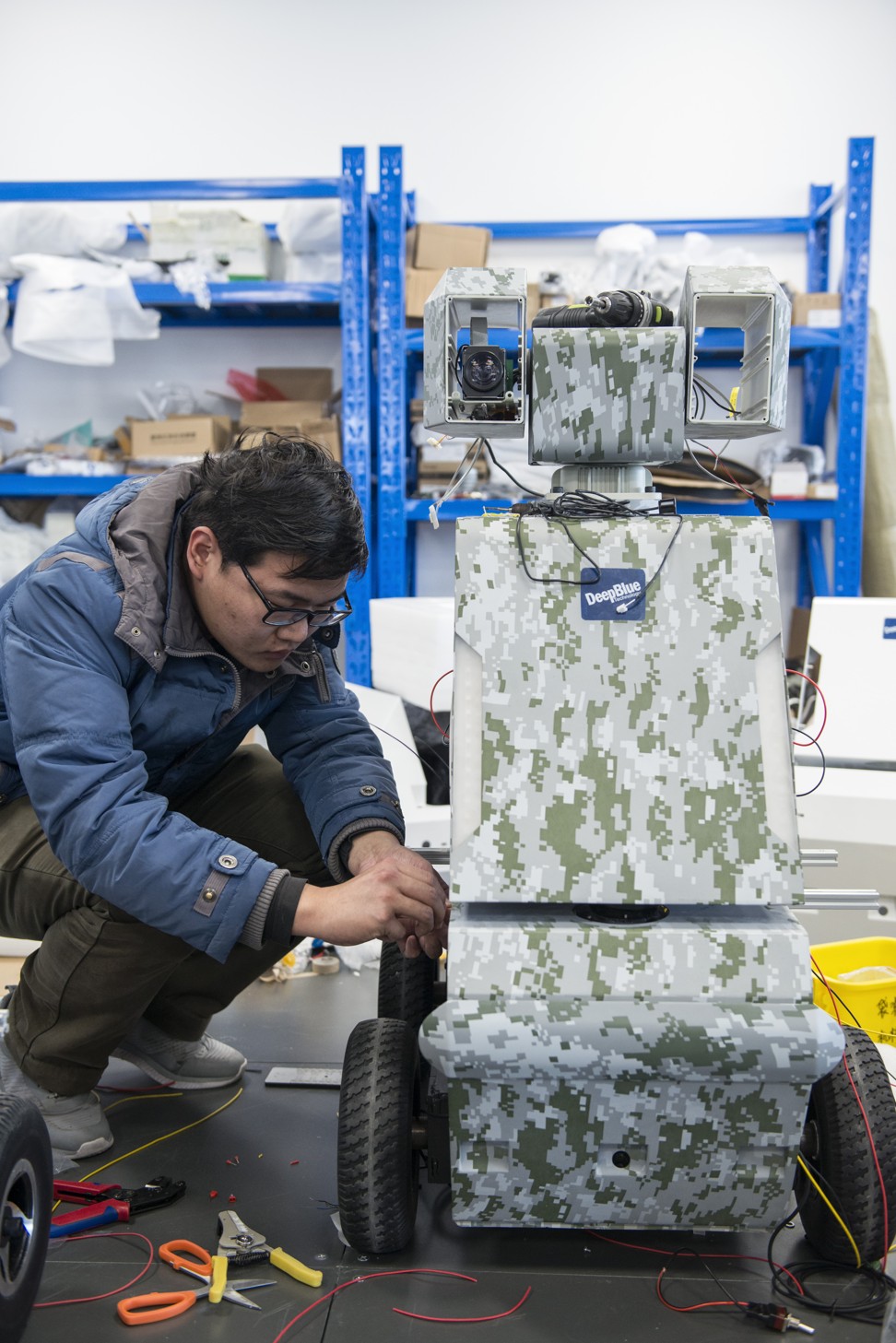

To reveal what else DeepBlue is up to, Chen agrees to let Post Magazine inside the brain of the company: the lab where prototypes are developed. The brightly lit room occupies half of the ninth floor of the futuristic skyscraper. Scattered everywhere are cables, chips, sensors, robot parts and all kinds of tools.

An engineer tests a vending machine designed to move down the aisles of high-speed trains. A thousand units ordered by Coca-Cola are to be rolled out to offer drinks to passengers in the same way stewardesses have been doing. Another employee is writing code onto a black screen.

He is designing an algorithm that will guide a dustbin as it sorts waste for recycling, we are told. A third engineer fine-tunes a tobacco vending machine so that it can tell whether a potential customer is over the age of 18.

“It can use vein technology to assess age, but there is a two-year error range, so it can require a user to swipe an NFC [near-field communication] enabled ID card if in doubt,” says Chen.
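The logic Chen outlines, a vein-based age estimate with a two-year margin and an ID card as the fallback, might look something like the sketch below. It is an assumption-laden illustration: the `age_gate` function and its return values are hypothetical, and the outright "deny" branch in particular is our assumption, since Chen mentions only the ID check for doubtful cases.

```python
LEGAL_AGE = 18          # minimum age mentioned in the article
ERROR_MARGIN_YEARS = 2  # the "two-year error range" Chen cites


def age_gate(estimated_age, legal_age=LEGAL_AGE, margin=ERROR_MARGIN_YEARS):
    """Decide what to do with an age estimate that may be off by `margin`."""
    if estimated_age - margin >= legal_age:
        # Even the most pessimistic reading is old enough.
        return "allow"
    if estimated_age + margin < legal_age:
        # Even the most generous reading is too young (assumed behaviour).
        return "deny"
    # The estimate straddles the limit: fall back to the NFC ID card.
    return "ask_for_id"


for age in (14, 17, 19, 25):
    print(age, age_gate(age))
```

With these numbers, anyone estimated to be between 16 and 19 would be asked for ID, which is the "if in doubt" band implied by a two-year margin around 18.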

A few robots roam around. Some, in humanoid shapes, have been designed to offer all kinds of information, and engineers are testing their behaviour in low-light environments. Others look like vehicles and are built to clean or deliver packages.

DeepBlue’s “robocop” looks nothing like the Hollywood version. It wears metal armour in a camouflage pattern and moves on wheels, not legs. It uses computer-vision cameras to see, but it wouldn’t go blind if they were all broken. The machine can, at any time, access feeds from up to 50 nearby CCTV cameras.

“If there is an intrusion or riot, the robot can understand what is happening because it can interpret the visual input,” says Chen, with obvious pride. “Traditional computer-vision technology would, for example, be able to tell that there were four people in an image, and identify who they are.

“But we want to go a step further and make technology describe the images, so it would know that there are three males and a female, what they are wearing and what they are doing. These are intuitive things for a human, but it’s a very hard thing for a computer to do.”

In theory, these robots should be able to interpret the behaviour of miscreants and, Chen is keen to point out, his robocops won’t be affected by emotions the way a police officer may be. But that doesn’t mean they won’t make mistakes.

“Because we programme machines, they will react the way we do. The best AI is that which behaves closest to the human way,” Chen says.

“We have just received a large order from the Chinese authorities for search and patrol robots. They are already deployed in schools, factories, parks and tourist places.” DeepBlue has also developed a tougher version, armed with a taser, which has seen interest from local and foreign governments.

Chen prefers to highlight more peaceful robots. Especially those designed to save lives: “They can increase the safety of workers. For example, if there is a fire, they will be the first responder and help assess and mitigate the dangers.”

A common feature of DeepBlue’s products is that once deployed they require no further human intervention.

With a rapidly growing number of autonomous stores, vehicles and service robots, and with a solid commitment from governments and corporations to accelerate the development of this kind of technology, many wonder whether humans are being rendered redundant.

“A century ago, factories were full of people because there were almost no machines,” Chen says. “Fast forward 100 years; now we have machines and the [population] has increased several fold. But unemployment is not higher than it was then. And what has happened to the efficiency of the global population? It may surprise you, but it has actually decreased.

“If we look forward another century, we will see machines taking over many of the tasks humans do, so humans can concentrate on other things.”

Chen envisages a world in which humans focus on innovation. “Mopping the floor is a very standard circular motion. Why do we need a human to do it? If we get a machine to do it, this person can do something more meaningful. We have been used as machines in the past, so we will take our rightful place; we will be able to fully develop our human prowess.”

But not everybody is able to write code or paint a masterpiece. “Society must advance, even if that means hurting a part of that society that loses out. We have to look out for the larger group of people who will benefit from it,” says Chen, who believes the world of tomorrow will be different from the one we live in today in ways we can’t even imagine.

At rival firm Yitu Technology, chief scientist Wu Shuang is not quite as optimistic.

“Technology will lead to the loss of many jobs, either because of an increase in productivity [of workers being assisted by machines] or because machines take the place of humans [altogether],” Wu says during an interview in Shanghai.

“We have to prepare for the end of manual labour. But new jobs we now can’t even think of will be created. The big question is whether the latter can offset the effect of the former.”

On the other hand, “this is not a transition we haven’t done before”, he says. “We went from an agricultural society to an industrial one. Nowadays, services make up most jobs. The difference is that we need to focus on creativity and invest heavily in education from now on.”

Robin Li Yanhong, founder and chief executive of search engine Baidu, spoke along the same lines at the World Artificial Intelligence Conference, in Shanghai, in September: “Workers will have to keep updating their knowledge to avoid becoming obsolete.”

And it’s not only blue-collar jobs that will be lost. Yitu is developing advanced AI medical diagnosis systems that, the company believes, will replace doctors in many instances.

Perhaps for my benefit, Chen adds writers to the list of endangered professionals: “They have various levels of ability. A report or a simple composition can be written by machines, but not a piece that requires consciousness, innovation and inspiration.”

Last week, DeepVogue, an AI design system created by DeepBlue Technology, competed against 15 human teams at the China International Fashion Design Innovation Competition, finishing as a runner-up.

Indian-American computer scientist Raj Reddy, winner of the 1994 Turing Award, often described as the Nobel Prize of computing, fears robotics and AI “may be used to strengthen despotic governments” and will deepen social disparity between those who own the technology and those who don’t.

“Technology is neutral, but the people who use it are not,” Wu points out.

“The focus of our time and our energy should be put into how we can coexist with machines,” says Chen. “Machines increase productivity. This means an increase in profit, too. And more taxes paid as a result.”

Wu believes that taxing machines could result in a universal basic salary, with robots financially supporting humans. But will they get fed up of doing so? Will artificial intelligence ever surpass our own?

Chen and Wu both believe it will.

“Humans haven’t evolved biologically much in the past 200 years, but why have we improved so much? Because of technology that allows us to create changes in our environment,” Chen says. “AI will be smarter than us because not even in thousands of years have we evolved biologically as much as technology has advanced in just a couple of centuries.

“We thought human intelligence could never be copied, much less replaced. We are still very far from that because AI is not yet able to reason, but we need to understand that human intelligence is not irreplaceable. Machines will eventually make decisions, and do so better than us.”

Pieter Abbeel, co-director of the Artificial Intelligence Research Lab at the University of California, Berkeley, in the United States, has calculated what it would take to replicate the computing power of a human brain.

“It’s a petaflop [a quadrillion calculations per second],” he announced at the Shanghai conference. “There is currently no machine with such computing power, but we will be able to use the cloud to achieve it using a network of computers.”

Chen has put a time frame on developments: “The computational ability of a machine increases exponentially every year, and machines are getting smaller and smaller. A human brain has around 30 billion neurons. In about two years a chip will surpass this capability. In China, we have already reached the equivalent of 8 billion neurons.”

Abbeel gave an unnerving forecast: “In the future, the machine equivalent of a human’s computing ability could be cheaper than the minimum wage.

“Machines may look at us the way we look at ants; we just don’t pay any attention to them.” That’s why the professor is calling for a global debate about the future of AI. “We need to make sure it serves our interests.”

People such as the late physicist Stephen Hawking and businessman Elon Musk have warned about the danger AI poses for the human species, but Chen shrugs off such worries: “Chips may surpass human imagination. But we should not look at this scenario as a mutually exclusive one, where robots take over humans, but as a complementary one, where robots complete our abilities.”

Chen believes technology may allow humans to live forever, albeit not in a physical form.

“We will be able to use machines to help preserve our cognitive abilities,” he says. “And I hope that technology will eventually allow me to download my consciousness and identity into a machine that can be a delivery bot, for example.

“When we have to give up our body, we will find another way to exist. Maybe I will see you after 300 years. Maybe I will be able to talk to my great-great-great-grandson through some program.”

With just such a conversation in mind, DeepBlue is developing a brain-computer interface, a way of controlling machines using the mind. Currently, it works only with simple commands. For example, a user can make the falling pieces in a Tetris game turn and move using brain waves.

“The future is made up of human consciousness, which can’t be replicated because it’s unique to each individual, and the computational ability of machines,” says Chen.

But will that superintelligence be free of human bias?

“At the moment,” Wu says, “there are no unbiased algorithms. It’s technically impossible because there will always be some scenarios in which they don’t perform well. This means they will be discriminatory. And that’s why we believe technology will be prejudiced.

“Take the example of facial recognition. When we develop these systems, we know they won’t work well with certain groups of people. Kids have softer features and are harder to identify. People can understand and accept that. But if the algorithm can’t recognise black people, people will call it racist.”

Back in the present, China and the US fight for world hegemony, and Beijing wants technology to tip the scales in its favour.

“Historically, superpowers have clashed,” Chen says. “The established empire will have frictions with the rising one. It happened to the Romans, to the Spanish, to the English. The world should understand that China is now at the same level as America. This is good because we are getting a higher level of respect from the international community and China can make the world a more balanced place.”

Chinese citizens, Wu argues, are embracing technology faster and more willingly than Westerners because it has brought positive changes since the country opened up.

“In contrast, places like Europe have seen an erosion in their welfare state and, therefore, people there are wary.”

In the AI era, privacy is also a concern.

“If we have made headway in Europe it is because our technologies are compliant with the privacy laws there. We believe that each region has its rules and there is no right or wrong. Some people think Europeans are too strict in this regard, but I understand their point of view,” Chen says.

“I believe that companies should not have the right to store external biometric data of citizens. We use palm technology instead and have already got approval from different governments, including those of Greece, Italy and Germany.

“No data of our foreign users will be stored in Chinese servers,” promises the DeepBlue chief executive.

That’s a promise we shouldn’t assume the machines will keep once they have started to treat humans like ants.