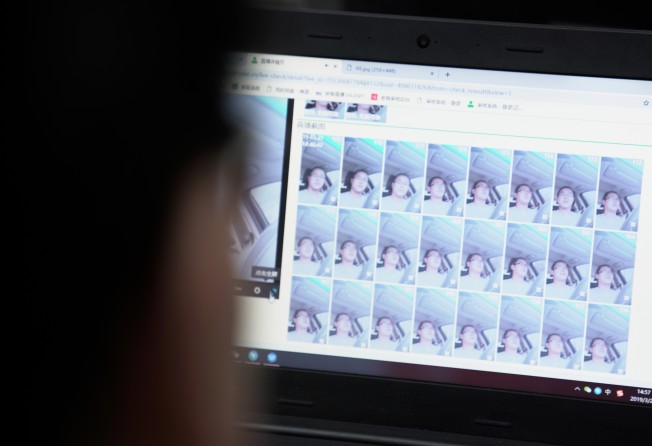

In the Philippines, content moderators at YouTube, Facebook and Twitter see some of the web’s darkest content

- In the last couple of years, social media companies have created tens of thousands of jobs around the world to vet and delete violent or offensive content

A year after quitting his job reviewing some of the most gruesome content the internet has to offer, Lester prays every week that the images he saw can be erased from his mind.

First as a contractor for YouTube and then for Twitter, he worked on a high-up floor of a mall in the Philippine capital, Manila, where he spent up to nine hours each day weighing questions about the details in those images.
