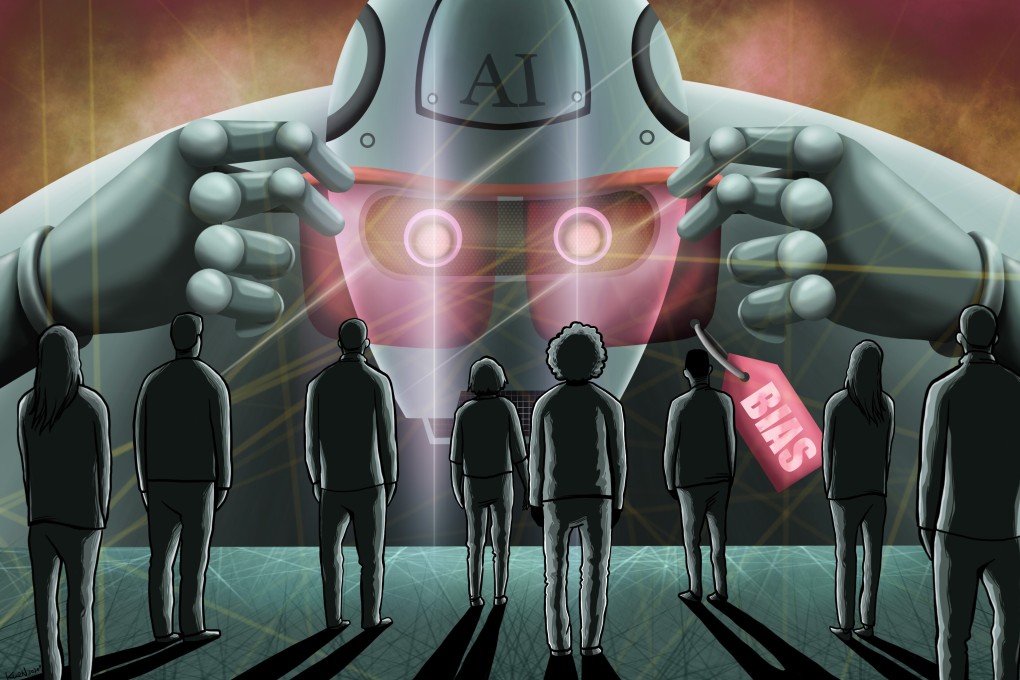

Sexist and racist AI can result in wrongful arrests, fewer job opportunities or even death. Now, more are taking notice

- Researchers have found that social biases can be amplified in AI algorithms that determine access to jobs, health care diagnosis outcomes and more

- Society can only achieve fair AI outcomes if it works together as a whole, rather than relying on the efforts of a few individuals, one expert says

Three years ago, while testing an artificial intelligence model for automatically labelling images from the web, Jieyu Zhao spotted a trend: it repeatedly associated images of people in kitchens with women, even when they showed men.

Suspecting social biases might play a part, Zhao, then a PhD candidate at the University of Virginia, teamed up with several peers to investigate.

Their findings, published in a 2017 paper, were shocking. While the images used to train the model showed 33 per cent more women than men in kitchens, the AI more than doubled this disparity, to 68 per cent.

“Structured prediction models can leverage correlations that allow them to make correct predictions even with very little underlying evidence,” the researchers wrote. “Yet such models risk potentially leveraging social bias in their training data.”

But so what if a machine mislabels an image of a man cooking as a woman, or a woman brandishing a gun as a man? Such mistakes might not seem like a big deal, but as AI becomes integrated into more aspects of daily life, they can have real implications for many people.

Zhao, now pursuing her PhD at the University of California, Los Angeles, is among a growing group of researchers worldwide examining how social biases, especially those related to gender and race, can be amplified by machine learning systems that affect access to jobs and credit, health care diagnosis outcomes, criminal sentencing and more.